Heroine Warrior's, the original coded by Adam Williams (bow down to the man!) and

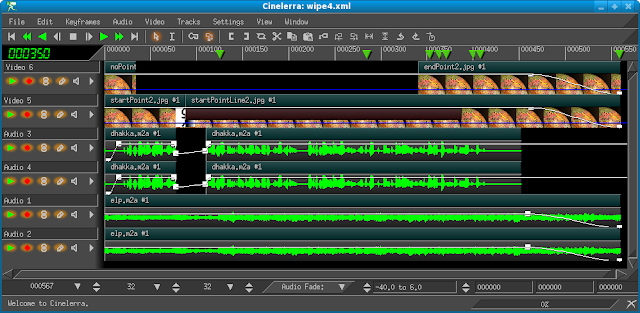

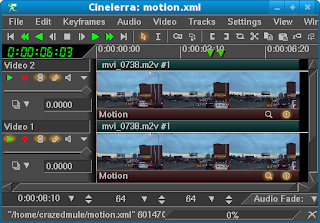

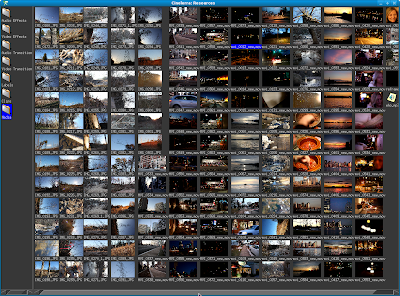

Cinelerra Community Version, Cinelerra CV

On my Fedora 10 x86-64 setup, I gave the latest HV Cinelerra 4 version a try. I ran into a bunch of hurdles, mainly the GCC 4.3 changes that left mjpegtools-1.9.0_rc3 with missing header includes, as described here:

http://bugs.gentoo.org/show_bug.cgi?id=200767
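For anyone hitting the same wall: GCC 4.3 trimmed libstdc++'s internal includes, so headers such as `<string>` no longer drag in `<cstring>` or `<cstdlib>`, and code that leaned on that fails with errors like `'strlen' was not declared in this scope`. The usual cure is to include what you use. The snippet below is only a sketch of that pattern; `parse_leading_int` is a made-up helper, not code from mjpegtools, and I have not checked which exact files the Gentoo patch touches.

```cpp
// Include what you use: since GCC 4.3, <string> and <iostream> no longer
// pull these in implicitly, so code calling strlen()/memcpy()/atoi()
// without them stops compiling.
#include <cassert>   // assert
#include <cstdlib>   // std::atoi
#include <cstring>   // std::strlen, std::memcpy, std::size_t, NULL

// Hypothetical helper (not taken from mjpegtools): copy a C string into
// a fixed-size buffer and parse the leading integer from it.
int parse_leading_int(const char *src) {
    assert(src != NULL);
    char buf[32];
    std::size_t n = std::strlen(src);
    if (n >= sizeof(buf)) {
        n = sizeof(buf) - 1;  // truncate, leaving room for the terminator
    }
    std::memcpy(buf, src, n);
    buf[n] = '\0';
    return std::atoi(buf);  // stops at the first non-digit
}
```

With the explicit includes, `parse_leading_int("720p")` compiles on GCC 4.3 and later and returns 720.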

I did get the software to compile. At the final "make install" step, however, the installation starts but does not complete; only the first 40 or so lines of the install run:

```
[mule@ogre bin]# make install
make -f build/Makefile.cinelerra install
make[1]: Entering directory `/usr/src/cinelerra-4'
make -C plugins install
make[2]: Entering directory `/usr/src/cinelerra-4/plugins'
mkdir -p ../bin/fonts
cp fonts/* ../bin/fonts
mkdir -p ../bin/shapes
cp shapes/* ../bin/shapes
cp ../thirdparty/mjpegtools*/mpeg2enc/mpeg2enc ../bin/mpeg2enc.plugin
make[2]: Leaving directory `/usr/src/cinelerra-4/plugins'
DST=../bin make -C libmpeg3 install
make[2]: Entering directory `/usr/src/cinelerra-4/libmpeg3'
cp x86_64/mpeg3dump x86_64/mpeg3peek x86_64/mpeg3toc x86_64/mpeg3cat ../bin
make[2]: Leaving directory `/usr/src/cinelerra-4/libmpeg3'
make -C po install
make[2]: Entering directory `/usr/src/cinelerra-4/po'
mkdir -p ../bin/locale/de/LC_MESSAGES
cp sl.mo ../bin/locale/sl/LC_MESSAGES/cinelerra.mo
make[2]: Leaving directory `/usr/src/cinelerra-4/po'
make -C doc install
make[2]: Entering directory `/usr/src/cinelerra-4/doc'
mkdir -p ../bin/doc
cp arrow.png autokeyframe.png camera.png channel.png crop.png cut.png
expandpatch_checked.png eyedrop.png fitautos.png ibeam.png
left_justify.png magnify.png mask.png mutepatch_up.png paste.png
projector.png protect.png record.png recordpatch_up.png rewind.png
singleframe.png show_meters.png titlesafe.png toolwindow.png
top_justify.png wrench.png magnify.png ../bin/doc
cp: warning: source file `magnify.png' specified more than once
cp cinelerra.html ../bin/doc
make[2]: Leaving directory `/usr/src/cinelerra-4/doc'
cp COPYING README bin
make[1]: Leaving directory `/usr/src/cinelerra-4'
[mule@ogre bin]#
```

As a result, the installation does not copy the cinelerra binary into /usr/local/bin. When I try to run the binary from the source code directory instead, I get this:

```
PluginServer::open_plugin: /usr/src/cinelerra-4/bin/brightness.plugin:
undefined symbol: glUseProgram
PluginServer::open_plugin: /usr/src/cinelerra-4/bin/deinterlace.plugin:
undefined symbol: glUseProgram
undefined symbol: glNormal3f
PluginServer::open_plugin: /usr/src/cinelerra-4/bin/swapchannels.plugin:
undefined symbol: glUseProgram
PluginServer::open_plugin: /usr/src/cinelerra-4/bin/threshold.plugin:
undefined symbol: glUseProgram
PluginServer::open_plugin: /usr/src/cinelerra-4/bin/zoomblur.plugin:
undefined symbol: glEnd
signal_entry: got SIGSEGV my pid=3965 execution table size=16:
awindowgui.C: create_objects: 433
awindowgui.C: create_objects: 440
awindowgui.C: create_objects: 444
awindowgui.C: create_objects: 447
awindowgui.C: create_objects: 453
suv.C: get_cwindow_sizes: 744
suv.C: get_cwindow_sizes: 774
suv.C: get_cwindow_sizes: 800
suv.C: get_cwindow_sizes: 821
editpanel.C: create_buttons: 177
editpanel.C: create_buttons: 303
editpanel.C: create_buttons: 177
editpanel.C: create_buttons: 303
mwindowgui.C: create_objects: 192
mwindowgui.C: create_objects: 195
mwindowgui.C: create_objects: 199
signal_entry: lock table size=6
0x357dc80 RemoveThread::input_lock RemoveThread::run
0x64d0370 CWindowTool::input_lock CWindowTool::run
0x64f3640 TransportQue::output_lock PlaybackEngine::run
0x3441940 TransportQue::output_lock PlaybackEngine::run
0x3442420 MainIndexes::input_lock MainIndexes::run 1
0x3442f80 Cinelerra: Program MWindow::init_gui *
BC_Signals::dump_buffers: buffer table size=0
BC_Signals::delete_temps: deleting 0 temp files
SigHandler::signal_handler total files=0
```

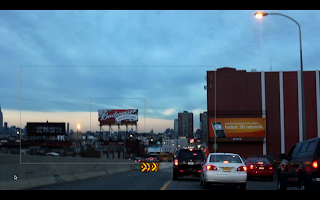

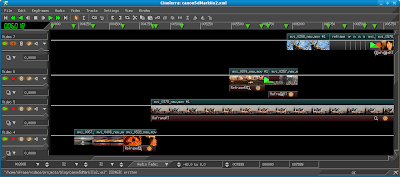
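The `undefined symbol` lines are what the dynamic loader's `dlerror()` typically reports when a shared object needs a function (here `glUseProgram`, an OpenGL 2.0 entry point) that none of the loaded libraries provide. Below is a minimal sketch of that loading step, assuming the plugins are opened with `dlopen()` and `RTLD_NOW`; I have not checked Cinelerra's actual flags, and `try_open_plugin` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdio>    // std::fprintf, NULL
#include <string>
#include <dlfcn.h>   // dlopen, dlerror, dlclose (POSIX)

// Hypothetical helper: try to load one plugin the way a plugin server
// might, and report why it failed. RTLD_NOW asks the loader to resolve
// every undefined symbol immediately, so a plugin that needs a missing
// function such as glUseProgram fails here with a readable message.
bool try_open_plugin(const std::string &path, std::string &error) {
    assert(!path.empty());
    void *handle = dlopen(path.c_str(), RTLD_NOW);
    if (handle == NULL) {
        const char *msg = dlerror();  // e.g. "<path>: undefined symbol: glUseProgram"
        error = msg ? msg : "unknown dlopen error";
        std::fprintf(stderr, "open_plugin: %s\n", error.c_str());
        return false;
    }
    error.clear();
    dlclose(handle);  // this sketch only checks that the plugin loads
    return true;
}
```

Running it over each `*.plugin` file in `bin/` would list the broken ones up front. On glibc older than 2.34 (Fedora 10 included), link with `-ldl`.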

Even though I have an NVIDIA graphics card, the errors above were related to OpenGL, so I thought I might have better luck compiling without OpenGL enabled. After I removed the OpenGL lines from hvirtual_config.h, I ran make clean; make. This time around, Cinelerra 4 started properly. However, it soon locks up when viewing my 720p MPEG-TS files:

```
[mule@ogre cinelerra-4]$ ./bin/cinelerra
Cinelerra 4 (C)2008 Adam Williams
Cinelerra is free software, covered by the GNU General Public License,
and you are welcome to change it and/or distribute copies of it under
certain conditions. There is absolutely no warranty for Cinelerra.
[mpeg2video @ 0xeafd00]slice mismatch
[mpeg2video @ 0xeafd00]mb incr damaged
[mpeg2video @ 0xeafd00]mb incr damaged
[mpeg2video @ 0xeafd00]invalid cbp at 14 37
[mpeg2video @ 0xeafd00]slice mismatch
[mpeg2video @ 0xeafd00]ac-tex damaged at 25 40
[mpeg2video @ 0xeafd00]ac-tex damaged at 6 41
[mpeg2video @ 0xeafd00]ac-tex damaged at 7 42
```
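Removing the OpenGL lines from hvirtual_config.h gets past the plugin errors because the GL code paths are chosen at compile time. The sketch below assumes the switch is a `HAVE_GL` define (check the real header for the actual name), and `render_path` is a hypothetical stand-in for an effect plugin. With the define gone, the preprocessor drops every GL call, so rebuilt plugins no longer reference `glUseProgram`; objects compiled earlier still would, which is why a full make clean; make is the safe way to rebuild.

```cpp
// hvirtual_config.h (assumed excerpt): the OpenGL switch.
// #define HAVE_GL    <- deleted for the OpenGL-free build

#ifdef HAVE_GL
const bool kOpenGLEnabled = true;
#else
const bool kOpenGLEnabled = false;
#endif

// Hypothetical effect-plugin routine that picks its render path at
// compile time.
const char *render_path() {
#ifdef HAVE_GL
    // A GL build would call glUseProgram() and friends here; those are
    // the references the loader could not resolve.
    return "opengl";
#else
    return "software";  // CPU-only path: no OpenGL symbols referenced
#endif
}
```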

So much for that experiment! I'm going back to the CV version for now.

the mule