As I am planning to purchase a 1080p cam, I will need my system to be on the latest kernel and software to get the best performance from Cinelerra. With that in mind, I'd like to back up my current Fedora 7 boot and root filesystems, just in case something goes wrong with the Fedora 9 install.

Partimage and My System

I will use partimage to back up these filesystems. Partimage needs to see both the source and the destination filesystems, so my first task is to figure out what I have. I built this system over a year ago and don't remember which physical drive holds which filesystem. I could go back into my notes to find out how I partitioned my system, but that would be cheating. So let's see what the filesystem tells me.

The first thing I do is look at the output of df:

[mule@ogre ~]# df -m

Filesystem 1M-blocks Used Available Use% Mounted on

/dev/md0 457295 6720 426972 2% /

/dev/md2 469453 417004 28602 94% /mnt/videos

/dev/sda1 99 19 76 20% /boot

tmpfs 1007 0 1007 0% /dev/shm

I have two RAID devices, one mounted as my root partition (/dev/md0) and one mounted as my video storage (/dev/md2). Next, I see that /dev/sda1 is my boot partition. Finally, there is a tmpfs filesystem for shared memory (/dev/shm). I am not concerned about saving its contents, as it lives in RAM.
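
As a rough sketch, the same reading of the df output can be done with a small filter that keeps only filesystems backed by a device under /dev/ and drops pseudo-filesystems such as tmpfs. The function name `list_disk_filesystems` is made up for this example, and it assumes mount points contain no spaces.

```shell
# List only filesystems backed by a block device (names under /dev/),
# skipping pseudo-filesystems such as tmpfs.
# Reads df-style output on stdin and prints "device mountpoint" pairs.
# Assumption: mount points contain no spaces.
list_disk_filesystems() {
    awk 'NR > 1 && $1 ~ /^\/dev\// { print $1, $NF }'
}

# Typical use on the running system:
#   df -P -m | list_disk_filesystems
```

On the system above this would leave /dev/md0, /dev/md2 and /dev/sda1, which are the three filesystems that matter for the backup.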

How It Works

Partimage backs up only filesystems that are not mounted, so it is started from a bootable rescue disk, like Knoppix or SysRescCd. The twist here is that I am using RAID partitions. Thus, when I boot with one of these CDs, I will need to assemble my RAID drives in order to have a source to back up (my root filesystem) and a destination to write to (my /mnt/videos filesystem). Partimage will not use a mounted filesystem as a source, but I will need to mount the destination.

Assembling My RAID Drives

I have forgotten the configuration of my RAID drives, so I look at /etc/mdadm.conf to figure out what partitions and UUIDs make up my two RAID sets:

[mule@ogre ~]# cat /etc/mdadm.conf

# mdadm.conf written out by anaconda

DEVICE partitions

MAILADDR root

ARRAY /dev/md0 level=raid0 num-devices=2 uuid=c0d4b597:c33b3014:ab694cee:76920165

ARRAY /dev/md2 level=raid1 num-devices=2 UUID=1705b387:1c71d83e:364b60b4:fb0cc192

This tells me that my root partition (/dev/md0) is a stripe set (RAID0) and that the storage for my important stuff, all my videos in /mnt/videos, is mirrored (RAID1) across two drives. I'm glad I built the system this way: I like the performance benefits of a stripe set for my root partition, but I would consider it tragic if I lost all my work to a single drive failure.
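
For a quick summary of the same information, a small awk filter can pull the device, RAID level and UUID out of each ARRAY line. This is only a sketch: `list_arrays` is a name invented here, and it handles just the simple `key=value` layout shown in the file above.

```shell
# Summarize ARRAY lines from an mdadm.conf-style file as
# "device level uuid". Keys are compared in lower case because the
# file above contains both "uuid=" and "UUID=".
list_arrays() {
    awk '$1 == "ARRAY" {
        dev = $2; level = "?"; uuid = "?"
        for (i = 3; i <= NF; i++) {
            split($i, kv, "=")
            key = tolower(kv[1])
            if (key == "level") level = kv[2]
            if (key == "uuid")  uuid  = kv[2]
        }
        print dev, level, uuid
    }' "${1:-/etc/mdadm.conf}"
}

# Typical use:
#   list_arrays /etc/mdadm.conf
```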

Video Display Problem with Linux and NVidia Card

For some reason I have not figured out, I cannot see virtual consoles once I exit Gnome. It appears to be some incompatibility between the NVidia 8800GT card and my Dell SC1430. This also affects the display when I boot with either Knoppix or SysRescCd: the screen goes black and I can't see any terminal sessions or virtual consoles. Therefore, in order to use the boot CD, I removed the NVidia card and booted using Dell's ATI ES1000 onboard video.

Booting with Knoppix

Once Knoppix is fully booted, I need to assemble my two RAID partitions. You can use either the UUID or the super-minor number of each RAID set to do this. I chose the super-minor, as it was simpler.

Assemble the Source RAID Set

Here I am assembling the source drive, my root filesystem:

root@Knoppix:/ramdisk/home/knoppix# modprobe md

root@Knoppix:/ramdisk/home/knoppix# mdadm --assemble -m 0 /dev/md0
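
For comparison, here is a sketch of the UUID route, using the UUID listed for /dev/md0 in mdadm.conf. The wrapper function `assemble_by_uuid` and the `MDADM` variable are inventions for this example. Setting `MDADM=echo` only prints the arguments that would be passed, which is handy for a dry run before touching any disks.

```shell
# Assemble an array by the UUID recorded in mdadm.conf instead of by
# super-minor number. MDADM is a hypothetical override: with MDADM=echo
# the arguments are printed instead of being passed to mdadm.
assemble_by_uuid() {
    dev=$1
    uuid=$2
    "${MDADM:-mdadm}" --assemble "$dev" --uuid="$uuid"
}

# Example, run as root from the rescue CD:
#   assemble_by_uuid /dev/md0 c0d4b597:c33b3014:ab694cee:76920165
```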

Mount the Destination Partition

Since I want to store the backup image on the same mirrored drive set that holds my videos, I'll mount that partition as the destination for the partimage backup. The destination array, /dev/md2, has to be assembled as well before it can be mounted. Of course, I first have to create the mount point:

mkdir /mnt/videos

mount -t ext3 /dev/md2 /mnt/videos
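
Before mounting, it may be worth confirming that the destination array is actually running. The sketch below checks an mdstat-format file for an active entry. `array_is_active` is a hypothetical helper name, and the `-m 2` assemble line in the comment is an assumption that /dev/md2 is assembled the same way as /dev/md0.

```shell
# Succeeds if the named md device (e.g. md2) is listed as active in an
# mdstat-format file. The second argument defaults to /proc/mdstat.
array_is_active() {
    grep -q "^$1 : active" "${2:-/proc/mdstat}"
}

# Example from the rescue CD (the assemble line is an assumption):
#   mdadm --assemble -m 2 /dev/md2
#   array_is_active md2 && mount -t ext3 /dev/md2 /mnt/videos
```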

Run Partimage to Back Up the Root Partition

I'll need three things to run partimage:

- an assembled RAID set for the source (my root partition), unmounted

- an assembled RAID set for the destination, mounted

- a compression method

Here's the partimage process:

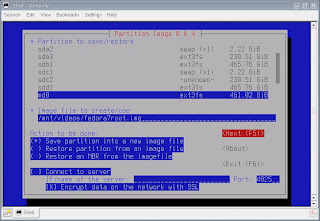

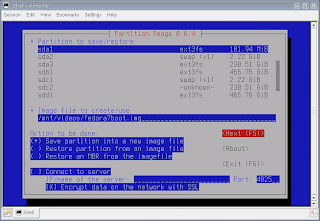

1) Select the partition to save and give the backup a destination and name. Note that "Save partition into an image file" is selected by default:

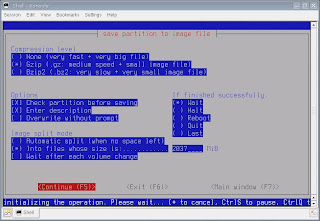

2) Select a compression method:

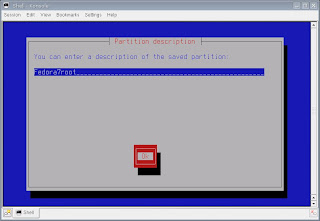

3) Give the backup image a description (optional):

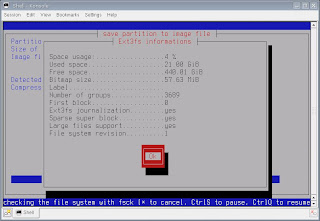

4) Partimage takes a few minutes to gather information about large (500GB+) drives, but then displays basic information about the partition to be backed up:

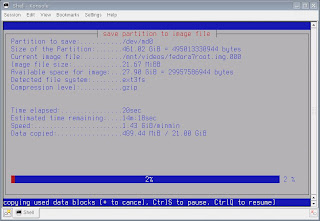

5) Partimage starts the imaging process. I had about 6GB to back up:

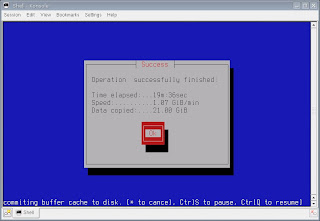

6) Partimage took about 20 minutes to create the backup image.

Backup complete. The restore process is similar, but instead of saving a partition into an image file as in Step 1 above, you'll choose the "Restore Partition from an image file" option.
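
The menu steps above can probably also be scripted with partimage's command-line options. The following is a sketch under assumptions, not the method used in this post: the image filename is made up, `save_partition` and the `PARTIMAGE` override are hypothetical, and the flags (`-z1` gzip, `-b` batch mode, `-d` no description prompt, `-f3` quit when finished) should be checked against the partimage man page on your rescue CD.

```shell
# Save a partition to a gzip-compressed image without the menus.
# PARTIMAGE is a hypothetical override: with PARTIMAGE=echo the
# arguments are printed instead of being passed to partimage.
save_partition() {
    src=$1      # unmounted source device, e.g. /dev/md0
    image=$2    # image path on the mounted destination
    "${PARTIMAGE:-partimage}" -z1 -b -d -f3 save "$src" "$image"
}

# Example (the filename is an assumption):
#   save_partition /dev/md0 /mnt/videos/f7-root.partimg.gz
```

As far as I know, partimage appends a volume suffix such as .000 to the image name, so a later restore would refer to that file.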

Run Partimage for Boot Partition

Since my boot partition is small (128MB), creating a backup image shouldn't take very long. My boot partition is /dev/sda1, which is not part of a RAID set, so it needs no assembly before imaging.

Now I should be ready to upgrade to Fedora 9. One hurdle I already see: the Fedora 9 installation doesn't recognize pre-existing RAID sets. Yarg. Looks like I might have to blow away the existing stripe set that is home to my root partition. I'll let you know how that goes.

Update 11/17/2008

The Fedora 9 x86-64 install went well. Here are some of the natty details:

http://crazedmuleproductions.blogspot.com/2008/11/fedora-9-x86-64-install.html

end update

Good day,

The Mule

References

mdadm man page