H264 encode

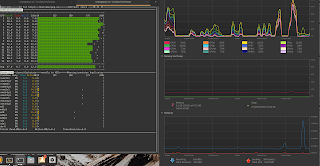

In the picture above, you can see that the H264 (libx264) encode maxes out my CPU at 92% while I transcode a 2.7K GoPro video. The final FPS of the encode is 23.

Now here is the encode using NVENC on the Maxwell-class GPU of the GTX 970. CPU utilization is down to 15% and my FPS goes from the low 20s to 38! Impressive!

Encoding Parameters

The params are a bit different between the two, but I think they are close enough for a comparison.

H264

ffmpeg -i GOPR0099.MP4 -profile:v high -preset medium -vcodec libx264 -acodec copy output2.mp4

NVenc

ffmpeg -i GOPR0099.MP4 -c:v nvenc -acodec copy nvenc.mp4
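A minimal sketch of how the two runs could be timed side by side. The `bench` helper and the `-y` (overwrite output) flag are assumptions added for illustration and were not part of the original runs; it only reports elapsed wall-clock seconds, not CPU utilization.

```shell
#!/usr/bin/env bash
# bench LABEL CMD [ARGS...]: run a command and print elapsed wall-clock seconds.
# Hypothetical helper for illustration; not part of ffmpeg.
bench() {
  local label=$1; shift
  local start end status=0
  start=$(date +%s)
  "$@" || status=$?
  end=$(date +%s)
  echo "$label: $((end - start))s (exit $status)"
}

# Only run the encodes when ffmpeg and the sample clip are actually present.
if command -v ffmpeg >/dev/null 2>&1 && [ -f GOPR0099.MP4 ]; then
  bench libx264 ffmpeg -y -i GOPR0099.MP4 -profile:v high -preset medium \
    -vcodec libx264 -acodec copy output2.mp4
  # Newer ffmpeg builds name this encoder h264_nvenc (see the update further down).
  bench nvenc ffmpeg -y -i GOPR0099.MP4 -c:v nvenc -acodec copy nvenc.mp4
fi
```

For the real/user/sys breakdown, the same commands can simply be prefixed with the shell's `time` keyword instead.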

Update 12/14/22

Compare these parameters:

FFMPEG_PRESET="-c:v libx264 -f mp4 -pix_fmt yuv420p -profile:v baseline -preset slow "

FFMPEG_PRESET="-c:v h264_nvenc -f mp4 -pix_fmt yuv420p -preset p2 -tune hq -b:v 5M -bufsize 5M -maxrate 10M -qmin 0 -g 250 -bf 3 -b_ref_mode middle -temporal-aq 1 -rc-lookahead 20 -i_qfactor 0.75 -b_qfactor 1.1"
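Since the two `FFMPEG_PRESET` values differ only in their encoder options, a script could pick one at run time. The sketch below assumes the choice is made by looking for `h264_nvenc` in the output of `ffmpeg -encoders`; the names `X264_PRESET`, `NVENC_PRESET`, and `pick_preset` are hypothetical, and this is not necessarily how my render script selects its preset. Keep in mind that an encoder being listed only means ffmpeg was built with it, not that a usable GPU is present.

```shell
#!/usr/bin/env bash
X264_PRESET="-c:v libx264 -f mp4 -pix_fmt yuv420p -profile:v baseline -preset slow"
NVENC_PRESET="-c:v h264_nvenc -f mp4 -pix_fmt yuv420p -preset p2 -tune hq -b:v 5M -bufsize 5M -maxrate 10M -qmin 0 -g 250 -bf 3 -b_ref_mode middle -temporal-aq 1 -rc-lookahead 20 -i_qfactor 0.75 -b_qfactor 1.1"

# pick_preset ENCODER_LIST: print the NVENC preset if the list mentions
# h264_nvenc, otherwise fall back to the libx264 preset.
pick_preset() {
  case "$1" in
    *h264_nvenc*) printf '%s\n' "$NVENC_PRESET" ;;
    *)            printf '%s\n' "$X264_PRESET" ;;
  esac
}

# Ask ffmpeg for its encoder list; this stays empty if ffmpeg is not installed.
encoders=$(ffmpeg -hide_banner -encoders 2>/dev/null || true)
FFMPEG_PRESET=$(pick_preset "$encoders")
echo "Using: $FFMPEG_PRESET"
```

When the variable is later passed to ffmpeg it has to stay unquoted (`$FFMPEG_PRESET`, not `"$FFMPEG_PRESET"`) so the shell splits it into separate arguments.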

The NVENC encode runs at roughly 327 fps and finishes in less than half the time of the libx264 encode. In the CPU graph, the small spikes on the right are about half the length of the tall ones.

The actual timing boost is impressive: from 44s down to 14s.

Regular libx264

real 0m44.101s

user 9m51.905s

sys 0m5.016s

H264_nvenc

frame= 5898 fps=417 q=22.0 size= 126976kB time=00:03:18.14 bitrate=5249.7kbits/s dup=1 drop=0 speed= 14x

No more output streams to write to, finishing.

real 0m14.730s

user 1m27.067s

sys 0m1.804s
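The timings above can be turned into ratios with a little arithmetic. This is a small sketch using `awk`; the helper names `to_seconds` and `ratio` are made up for illustration. It converts the `XmY.YYYs` values printed by `time` into seconds, then compares wall-clock time, user CPU time, and average throughput (frames divided by real time).

```shell
#!/usr/bin/env bash
# to_seconds 9m51.905s: convert "XmY.YYYs" to seconds (minutes * 60 + seconds).
to_seconds() {
  printf '%s\n' "$1" | awk -F'[ms]' '{ printf "%.3f\n", $1 * 60 + $2 }'
}

# ratio A B: print A / B rounded to two decimals.
ratio() {
  awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

# Values copied from the two `time` outputs above.
x264_real=$(to_seconds 0m44.101s);  nvenc_real=$(to_seconds 0m14.730s)
x264_user=$(to_seconds 9m51.905s);  nvenc_user=$(to_seconds 1m27.067s)

echo "wall-clock speedup:  $(ratio "$x264_real" "$nvenc_real")x"
echo "user CPU reduction:  $(ratio "$x264_user" "$nvenc_user")x"
# 5898 is the frame count from the ffmpeg progress line.
echo "average NVENC fps:   $(ratio 5898 "$nvenc_real")"
```

With these figures that works out to roughly a 3x wall-clock speedup and about 6.8x less user CPU time. The average of around 400 fps over the whole run is a little below the `fps=417` in the progress line, which is expected since `real` also includes startup and teardown.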

Of course, scaling functions need special command-line tweaks that I haven't figured out yet, and interpolate commands are still extremely slow.

Custom command used:

reset;/home/sodo/scripts/reviewCut/renderEdit.sh 20221105.ts 1 200 2 5 "Blump" E

Reference

https://developer.nvidia.com/nvidia-video-codec-sdk

https://trac.ffmpeg.org/wiki/HWAccelIntro

http://developer.download.nvidia.com/compute/redist/ffmpeg/1511-patch/FFMPEG-with-NVIDIA-Acceleration-on-Ubuntu_UG_v01.pdf

http://ubuntuforums.org/showthread.php?t=2265485

https://www.phoronix.com/scan.php?page=news_item&px=MTg0NTY
